5 Steps to Frictionless AI

It has been a decade since Harvard Business Review called data scientist the sexiest job of the 21st century. The companies that grabbed onto this talent have not only transformed but completely disrupted the status quo in the data & analytics industry. Much of this hype was initially driven by big tech giants (FAANG, or MAANG circa Nov 2021), but there has been an explosion of high-performing AI teams popping out of every corner of the world.

Photo by Tara Winstead from Unsplash, edited by Author.

The reality is that these high-performers are galloping towards their $1Bn valuations, leaving the rest of the companies to flounder in the dust they left behind. Many organisations are hiring data scientists, data engineers and others en masse to build data-driven solutions. But without adopting a few much-overlooked principles, these AI use cases stay stuck in the saddle and never cross the finish line.

Businesses are throwing money at highly prized data scientists, but this hack ’n slash approach to building and operationalising machine learning solutions is failing. The AI journey is painful, tedious and filled with friction across business, IT and data teams. No one is backing down from this fight because the cost of inaction is much scarier.

So we all soldier on.

Fortunately, it is possible to cut through the weeds and get to the frontline. But to score some relatable brownie points, here are some miseries every successful data team has faced at least once (but most likely all five):

- Zero-value solutions: The inability to integrate proof-of-concept (PoC) ML solutions into production systems. If a model is never deployed, costs rise without any lift in revenue. This translates to a negative Return on Investment (ROI), stagnating company financials and unhappy shareholders.

- Technology barriers: Heterogeneous technology stacks create complex development & production environments, causing teams to take shortcuts and accumulate technical debt. The “it works on my computer” problem suggests that the absence of infrastructure support is still a reality in many data science teams.

- Lack of cross-functional teams and technical leadership: The lack of strong leadership creates competing priorities between the teams (security vs. speed, cost vs. scale). An incorrect ratio of scientists to engineers and a failure to nurture cross-functional capabilities result in siloed and inefficient operations.

- Poor control over data & model: The data used by the machine learning solutions is not well governed, versioned and managed. The fear of not meeting regulatory requirements (such as POPIA, GDPR and HIPAA) keeps data teams from moving forward with their use cases. Model degradation resulting from unobserved data and model drift erodes stakeholder confidence while concerns around ethical AI pile on.

- Inadequate performance to meet business needs: The deployed models are often not able to serve the business adequately. The communication gap results in business users and development teams talking past one another. The non-functional requirements (often linked to infrastructure) are neglected until they become too late and painful to rectify.

So how do we channel our inner David and conquer the Goliath of enterprise inefficiency?

Grit, commitment and MLOps.

One of the most instrumental changes to the software engineering world that reduced similar friction was the introduction of agile and DevOps practices. The adoption of DevOps principles bridges the gap that once existed between software developers (Dev) and IT operations (Ops) teams, ensuring rapid development and delivery of software applications. DevOps is a set of practices, principles and tools that introduce speed, reliability, scale, collaboration and automation for software engineering. There was a need for something similar, more specific to the machine learning process. Enter MLOps.

MLOps is a set of practices to design, develop, deploy and maintain machine learning solutions in production with high reliability and efficiency. It extends the concept of DevOps to the machine learning development process.

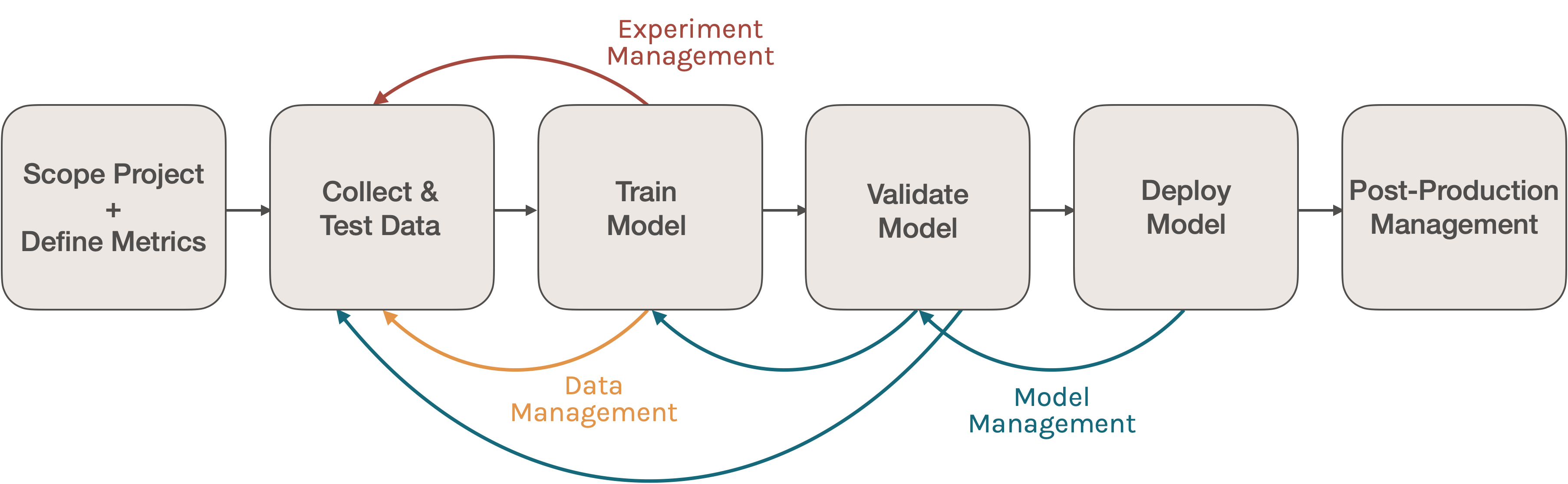

Extending DevOps to the machine learning process. Generated by Author.

There are five goals in MLOps:

- Transparent and traceable governance of data and models

- Improved development and testing practices

- Reproducibility of data pipelines and models

- Automation of deployments and retraining of models

- Monitoring of model performance (data degradation, serving performance, etc.)
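To make the monitoring goal a little more concrete, here is a minimal sketch of one common way to flag data drift: the Population Stability Index (PSI), which compares how a feature was distributed at training time with how it looks in production. This is an illustration under stated assumptions, not a prescribed method: the function name, the ten equal-width bins and the small floor for empty bins are all choices made for this example.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; higher means more drift."""
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread to bin")
    width = (hi - lo) / bins

    def bin_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Assumption for this sketch: a small floor avoids log(0) on empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bin_shares(reference)
    cur_shares = bin_shares(current)
    return sum(
        (c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares)
    )
```

A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but those thresholds are conventions rather than guarantees and should be tuned per feature and per use case.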

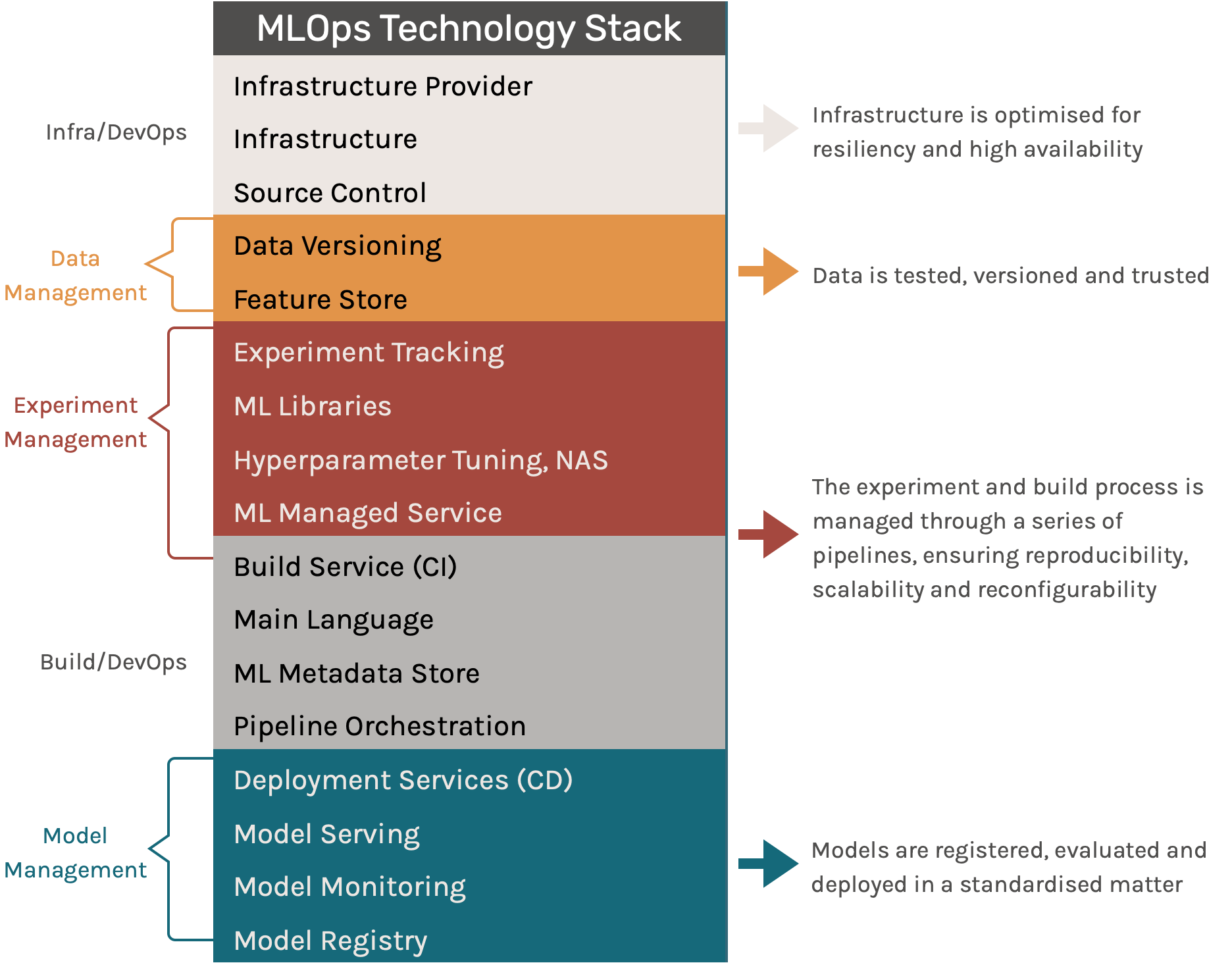

An end-to-end MLOps platform include the following five components:

MLOps Technology Stack. Generated by Author.

The five components are foundational to scalable MLOps, which ensures that:

- The infrastructure is optimised for resiliency and high availability

- The data is reliable, versioned and trusted

- The models are registered, evaluated and deployed in a standardised manner

- The process is managed through a series of pipelines ensuring reproducibility, scalability and reconfigurability
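As a rough illustration of what "versioned data" and "registered models" can mean in practice, the sketch below fingerprints the training data and hyperparameters, then stores them alongside each model version. Everything here is hypothetical: `ModelRegistry`, `ModelRecord` and `fingerprint` are names invented for this example, and the in-memory dictionary stands in for whatever registry service an organisation actually adopts.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any


def fingerprint(payload: Any) -> str:
    """Stable short hash of any JSON-serialisable object."""
    canonical = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()[:12]


@dataclass
class ModelRecord:
    name: str
    version: int
    data_hash: str      # which data the model was trained on
    params_hash: str    # which configuration produced it
    metrics: dict[str, float]


@dataclass
class ModelRegistry:
    """In-memory stand-in for a real registry service (hypothetical)."""
    records: dict[str, list[ModelRecord]] = field(default_factory=dict)

    def register(
        self,
        name: str,
        training_rows: list[dict[str, Any]],
        params: dict[str, Any],
        metrics: dict[str, float],
    ) -> ModelRecord:
        history = self.records.setdefault(name, [])
        record = ModelRecord(
            name=name,
            version=len(history) + 1,  # versions increase per model name
            data_hash=fingerprint(training_rows),
            params_hash=fingerprint(params),
            metrics=dict(metrics),
        )
        history.append(record)
        return record

    def latest(self, name: str) -> ModelRecord:
        return self.records[name][-1]
```

With a record like this, two versions trained on the same data share a `data_hash` while their `params_hash` differs, so a change in performance can be traced to either the data or the configuration.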

There are many technology providers who market their ability to manage one or more aspects of the MLOps life cycle. They are (probably) all great, but technology is not the answer to MLOps. To create frictionless AI successfully, there needs to be the willingness to collaborate between people, the courage to reinvent the processes, and the innovation to disrupt the technology. And data. Good data.

Once you have clean, accurate, timely data, here are the 5 steps for you to embark on the MLOps journey to frictionless AI:

Step 1: Embed the principles of MLOps first, not the technology

Having a clear MLOps strategy strengthens the business commitment to long-term machine learning investments. MLOps is about bringing people and practices together to solve complex problems. Adopting principles before technology ensures that everyone is heading to the same destination before the vendors flock in. Once the five goals of MLOps are understood, it will become easier to navigate through the tools and technology labyrinth.

Step 2: Identify and prioritise pain points in your MLOps journey

Take a problem-centric approach to identify the existing pain points in delivering ML solutions to the organisation. Then prioritise them so that the pain points with the highest impact on the organisation are addressed first. For example, if serving and maintaining different models is the biggest friction, first standardise the serving architecture. If debugging models in production is the most time-consuming task for your engineers, implement model observability tools to accelerate problem identification. Use this as the driver in the MLOps strategy and execution plan.

Step 3: Build a cross-functional team internally or through credible partners that are technology-agnostic

Machine learning use cases span multiple teams. Creating an appropriate organisation structure to embed the MLOps team is critical to the success of the use cases. For a team size under 200, centralised platform teams are efficient in integrating infrastructure, data engineering and data science teams. However, as team size grows beyond 200, embedded MLOps teams may be required to accelerate the machine learning life cycle without compromising on organisation architecture and solution integrity.

The rate of technological change means that often not all the technical skills needed are available internally. Technical leaders need to perform a skills gap analysis to identify critical skills shortages as an input into the MLOps strategy. Sourcing external specialists and evangelists is an effective way to accelerate the adoption of MLOps culture.

Step 4: Converge on a technology stack and automate processes to alleviate engineering overhead

Existing technology and tools are likely to be pervasive in the organisation. But these tools can have overlapping features spanning different cloud providers. The technology leader should take a closer look at consolidating the landscape, thereby reducing the engineering overhead of maintaining an array of interdependent tools. A simplified platform can shorten the onboarding time of a new engineer and a new use case.

Select a strategic provider (AWS, Azure, GCP, Databricks, or better yet, go Cloud-Native) that will enable MLOps for the organisation and define a roadmap to achieve this. Migration to a new technology stack will not happen overnight and should be phased in over a period of time. For example, the ability to start containerising solutions will help to remove infrastructure dependencies in the migration.

Step 5: Design with the end in mind to reduce friction from PoC to production, but plan for iterative development

It is tempting to start writing code and delivering features on Day 1. But the onus is on the leader to ensure enough time is spent upfront in validating the business problem and designing the end solution. A clear path to production combined with the MLOps technology stack allows the ML team to develop and deploy the end-to-end ML solution framework upfront.

An iterative approach can then be taken to enhance the solution with more advanced cleaning, feature engineering and modelling approaches without the strain of environment inconsistencies and deployment failures. The data scientists can focus on what they do best: ensuring the best-performing models and ROI for the business.
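One way to picture "framework first, sophistication later" is a pipeline whose shape is fixed on day one while its stages stay swappable. The sketch below is purely illustrative: `Pipeline`, the stage functions and the `x` and `target` field names are assumptions made for this example, and the models are deliberately trivial.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional

Row = dict[str, Optional[float]]
Rows = list[Row]
Model = Callable[[Row], float]


@dataclass(frozen=True)
class Pipeline:
    """Fixed path from raw data to a servable model; only the stages change."""
    clean: Callable[[Rows], Rows]
    featurise: Callable[[Rows], Rows]
    train: Callable[[Rows], Model]

    def run(self, raw: Rows) -> Model:
        return self.train(self.featurise(self.clean(raw)))


def drop_missing(rows: Rows) -> Rows:
    # Day-one cleaning: discard any row with a missing value.
    return [r for r in rows if all(v is not None for v in r.values())]


def passthrough(rows: Rows) -> Rows:
    # Placeholder stage so the full path exists before real features do.
    return rows


def mean_baseline(rows: Rows) -> Model:
    # Simplest possible model: always predict the training mean.
    mean = sum(r["target"] for r in rows) / len(rows)
    return lambda _row: mean


def rate_model(rows: Rows) -> Model:
    # A later iteration: predict the target as a constant rate times "x".
    rate = sum(r["target"] for r in rows) / sum(r["x"] for r in rows)
    return lambda row: rate * row["x"]


baseline = Pipeline(clean=drop_missing, featurise=passthrough, train=mean_baseline)
# Iterating swaps one stage; the rest of the path to production is untouched.
improved = replace(baseline, train=rate_model)
```

Because `baseline` can be deployed end to end before any serious modelling starts, deployment problems surface early, and each later improvement is a one-stage change rather than a rebuild.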

For anyone who came to get a quick fix on commercialising AI solutions, take this as the final note:

There is no silver bullet to Frictionless AI

It all depends on the context of your organisation and its willingness to change and embrace disruption. Start by being empathetic with your users (internal or external), and clear goals will start solidifying. Couple that with a talented team and a strong leadership vision, and the power AI/ML can unleash in your organisation will be unprecedented. We just need to work together and strive to build solutions that are reliable, inclusive, and trustworthy.

Thanks for reading! If you want to know more about MLOps deployment problems, please check out our video from AI Expo Africa. For cloud-native tech and machine learning deployment, feel free to email us at melio.ai.